On-demand synthetic datasets

And You Thought Training AI Was Hard?

Let us show you how using synthetic datasets makes it easy!

Speed, Cost, Flexibility

You can build a synthetic dataset for a fraction of the cost of a real-world image dataset. Create a 3D scene and a fully labeled image matching your use case in seconds. Easily extend your dataset to match each new edge case throughout your development cycle.

Labeling

Manual labeling of real-world images is a slow and costly process. QA processes are required to filter out abnormal labels. With synthetic datasets, labels are instant, pixel-perfect and bias-free.

Data Collection

Even when it is possible, collecting real-world images is usually a daunting task, and privacy issues can complicate it further. Procedural generation of synthetic datasets is a game changer: you create your own images in a few clicks and avoid privacy issues altogether.

Optimization

Synthetic images let you easily adjust parameters, variance, and distribution for each use case, resulting in efficient training and high-performing models that generalize well.

Winner: Synthetic Datasets!

We think the benchmarks prove it.

And that’s good science.

Research Summary

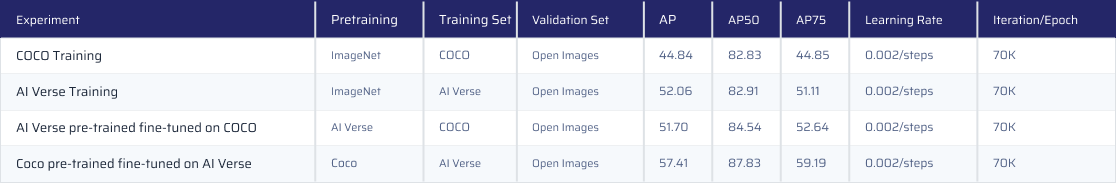

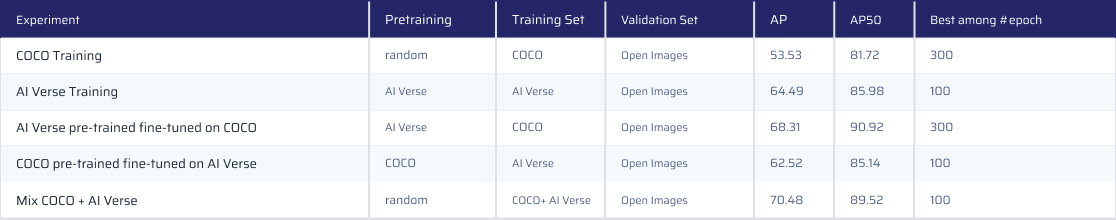

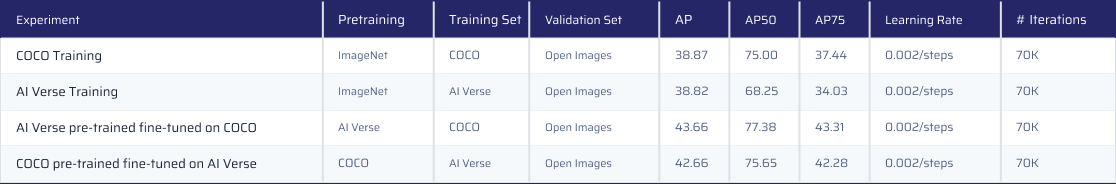

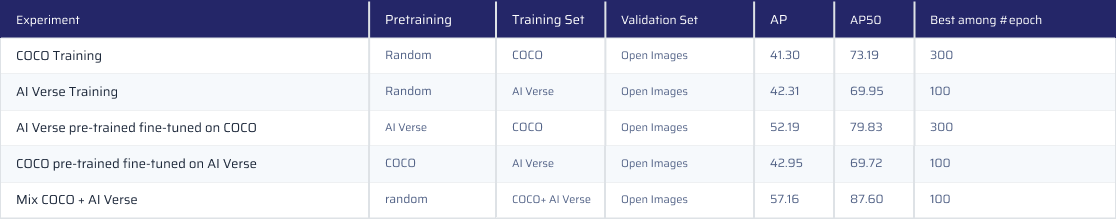

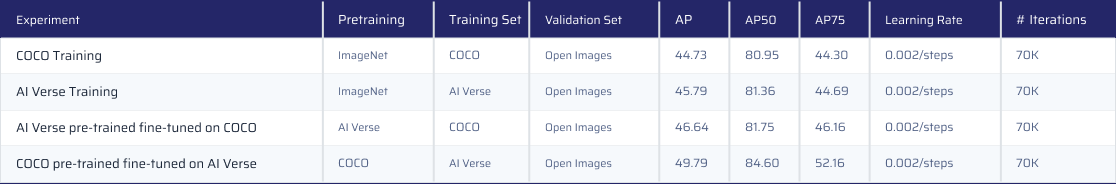

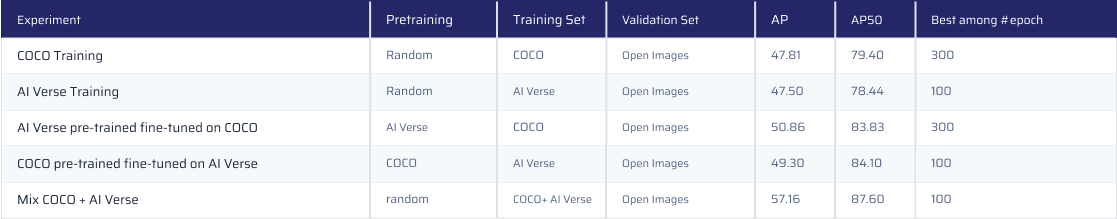

To evaluate the efficiency of synthetic datasets in model training, we conducted a series of benchmarks comparing training on synthetic images with training on real-world images (COCO dataset). To date, we have results for two distinct models, YOLOv5 and Mask R-CNN, on three tasks of increasing difficulty: sofa, bed, and potted plant detection. The benchmark tests were conducted using 1,000 assets from our database.

Procedure

Real-world training datasets were extracted from Microsoft COCO for each class of interest. We obtained 3,682 images with the label “bed,” 4,618 with the label “couch,” and 4,624 with the label “potted plant.”
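As an illustration of this kind of per-class extraction, here is a minimal sketch that selects image IDs by category name from an annotation dictionary laid out in the COCO style (`images`, `categories`, `annotations`). The function name `image_ids_for_category` and the toy data, including its category IDs, are assumptions made for this example; this is not the script used for the benchmark.

```python
def image_ids_for_category(coco: dict, category_name: str) -> set:
    """Return the IDs of images with at least one annotation of the given category."""
    # Resolve the category name to its numeric ID(s).
    cat_ids = {c["id"] for c in coco["categories"] if c["name"] == category_name}
    # Collect every image that carries an annotation in that category.
    return {a["image_id"] for a in coco["annotations"] if a["category_id"] in cat_ids}


# Toy, in-memory annotations (hypothetical IDs and file names).
toy = {
    "images": [
        {"id": 1, "file_name": "a.jpg"},
        {"id": 2, "file_name": "b.jpg"},
        {"id": 3, "file_name": "c.jpg"},
    ],
    "categories": [
        {"id": 10, "name": "bed"},
        {"id": 11, "name": "couch"},
        {"id": 12, "name": "potted plant"},
    ],
    "annotations": [
        {"id": 100, "image_id": 1, "category_id": 10},
        {"id": 101, "image_id": 1, "category_id": 12},
        {"id": 102, "image_id": 2, "category_id": 11},
        {"id": 103, "image_id": 3, "category_id": 10},
    ],
}

bed_ids = image_ids_for_category(toy, "bed")
couch_ids = image_ids_for_category(toy, "couch")
plant_ids = image_ids_for_category(toy, "potted plant")
```

With a real annotation file, the dictionary would come from `json.load` on the downloaded COCO annotations instead of the toy data.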

We used our procedural engine to generate a synthetic dataset for each test: 63K synthetic images for bed detection, 72K for couch detection, and 99K for potted plant detection.

We also used ImageNet for pretraining models in several experiments.

Validation datasets were constructed from Open Images for each class of interest. We extracted 199 images for the label “bed,” 799 images for the label “couch,” and 1,533 images for the label “plant.”
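To put the dataset sizes above side by side, the short sketch below computes the synthetic-to-real training ratio for each class. The synthetic counts are the rounded "K" figures quoted above, so the ratios are approximate; the variable names are chosen for this example only.

```python
# Dataset sizes as stated in the procedure (synthetic counts are rounded).
real_train = {"bed": 3_682, "couch": 4_618, "potted plant": 4_624}
synthetic_train = {"bed": 63_000, "couch": 72_000, "potted plant": 99_000}
validation = {"bed": 199, "couch": 799, "potted plant": 1_533}

# Approximate number of synthetic training images per real training image.
ratios = {name: synthetic_train[name] / real_train[name] for name in real_train}

for name in real_train:
    print(
        f"{name:>12}: real={real_train[name]:>5}  "
        f"synthetic={synthetic_train[name]:>6}  "
        f"validation={validation[name]:>5}  "
        f"ratio={ratios[name]:.1f}x"
    )
```

Each synthetic training set is roughly 15 to 21 times larger than its real-world counterpart.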

Conclusions

The domain gap between a training set and a validation set, or between a training set and real-world deployment images, is not exclusive to synthetic datasets. It is a general issue that also arises when a model trained on one set of real-world images is applied to another.

Synthetic images are generally more efficient than real-world images for training models. This may seem counterintuitive, since synthetic images are less realistic than real-world images.

Because of the domain gap, however, image realism is not the key to training a model. Instead, the variance and distribution of the parameters are the crucial factors in obtaining a model that generalizes well.

Variance and distribution of parameters are not easily controllable with real-world images.
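To make "controlling variance and distribution" concrete, here is a minimal sketch of sampling scene parameters from explicitly chosen distributions. Everything in it is an assumption made for illustration: the parameter names (`camera_height_m`, `light_intensity`, `num_objects`), the ranges, and the choice of distributions are hypothetical and do not describe the AI Verse procedural engine.

```python
import random
from dataclasses import dataclass


@dataclass
class SceneParams:
    camera_height_m: float  # hypothetical parameter
    light_intensity: float  # hypothetical parameter
    num_objects: int        # hypothetical parameter


def sample_scene(
    rng: random.Random,
    height_range=(0.5, 2.5),
    light_mean=1.0,
    light_std=0.3,
    max_objects=5,
) -> SceneParams:
    """Draw one scene description from user-chosen distributions."""
    return SceneParams(
        # Uniform: every camera height in the range is equally likely.
        camera_height_m=rng.uniform(*height_range),
        # Gaussian, clamped at zero: most scenes are lit near the mean.
        light_intensity=max(0.0, rng.gauss(light_mean, light_std)),
        # Discrete uniform over 1..max_objects inclusive.
        num_objects=rng.randint(1, max_objects),
    )


rng = random.Random(0)  # fixed seed so the dataset can be regenerated
scenes = [sample_scene(rng) for _ in range(1000)]
```

Widening `height_range` or raising `light_std` increases the variance of the generated set, which is the kind of adjustment that is hard to make once real-world images have already been collected.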

Models can be successfully pre-trained on synthetic images and fine-tuned on real-world images, or the other way around; the choice depends on the task and the model.

Comparison Results

BEDS

AI Verse Synthetic Dataset Sample Images

PLANTS & COUCHES

AI Verse Synthetic Dataset Sample Images